Did you come up with something better than Quorum in the previous Learning Perl challenge? There’s been some spirited conversations since then and some surprising new information. Continue reading “Learning Perl Challenge: Be better than Quorum (Answer)”

Category: Challenges

Learning Perl Challenge: Be better than Quorum

Sinan Ünür wrote about some click bait that claimed Perl programmers were worse than programmers in a fictional language named Quorum. His post goes through all the experimental and analytic errors, as many of his posts do. Continue reading “Learning Perl Challenge: Be better than Quorum”

Learning Perl Challenge: March Madness

Warren Buffet’s Berkshire Hathway is insuring Quicken Loans’ prize of $1 Billion dollars to someone who picks a perfect March Madness bracket and 20 prizes of $100,000 to the closet brackets. The rules won’t be enumerated until March 3, but so far they haven’t outlawed Garciaparra-ing by pulling a Nandor. If you want people to sit up and notice Perl, winning this contest with a Perl program will get you all the fame you want. You’ll be any job you want, but with $500 million (the present day value single payout), you won’t have to take it. Continue reading “Learning Perl Challenge: March Madness”

Learning Perl Challenge: Remove intermediate directories

I often run into situations where I have directories that contain only one file, a subdirectory, with contain only one file, a subdirectory, and so on for a long chain, until I get to the interesting files. These situations come up when I have only part of a data set so the files that would be in other directories aren’t there, and I find it annoying to deal with these long directory specifications. So, this challenge is to fix that by collapsing those one-entry directories into a single one.

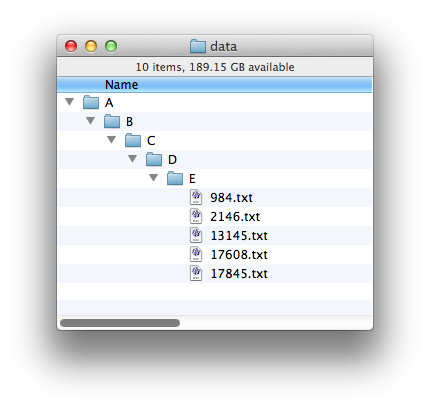

For example, you should take this structure, where you have A/B/C/D/E in a direct line with no other branches:

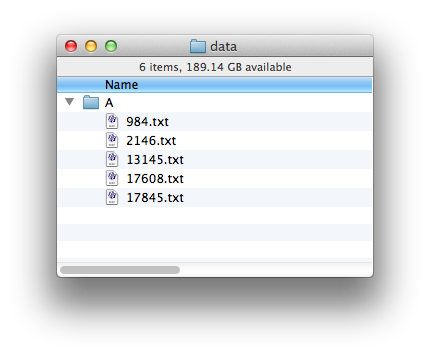

and turn it into this one, with a single directory with the files that were at the end:

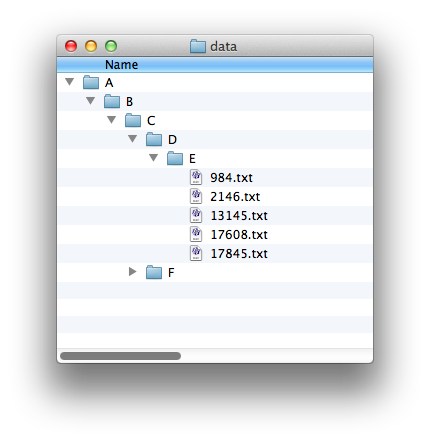

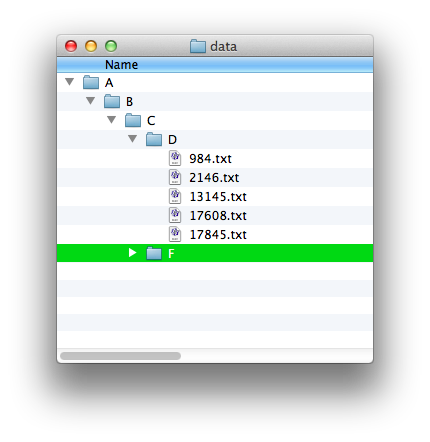

However, you should only moves files up if the directory above it has only one entry (which must be a subdirectory!). In this example, A/B/C has two subdirectories in it:

so the the files in E should only move up into D. Otherwise, the files from the two branches in C would get mixed up with each other.

Learning Perl Challenge: popular history (Answer)

June’s challenge counted the most popular commands from a shell history. Some shells remember the last commands you used so you can start a new session and still have them available. For this exercise, I’ll assume the bash shell.

You setup the history feature by telling your shell to track the history. You want to remember 3,000 previous commands:

HISTFILE=/Users/brian/.bash_history HISTFILESIZE=3000 HISTSIZE=1500

There’s much you can do with your command history, but that’s not what I’m covering here. The bash history cheat sheet explains most of it.

There are two ways you can get at the history. You can run the history command and pipe it to your Perl program as standard input, or you can read the history file (perhaps also piping it at standard input). The shell only writes to the file at the end of the session, so the file doesn’t know about recent commands. Also, each session merely appends to the file. Each session’s history is contiguous, so the file is not necessarily chronological. This doesn’t matter much to the challenge to find the overall popular commands.

Once you get the input, you have to figure out which part is the command. There are several issues there too. The first part of the line might be the command, a path to the command, or some sort of modifier for the command. A command line might have a pipeline of multiple commands or a series of separate commands. Some of the commands might be shell built-ins while others are external programs. Some commands might be user-defined aliases:

tail -f /var/log/system.log /usr/bin/tail/ -f /var/log/system.log sudo vi /etc/groups history | perl -pe 's/\A\s*\d+\s*//' grep ^_x /etc/passwd | cut -d : -f 1,5 | perl -C -Mutf8 -pe 's/:/ → /g' (cd /git/dumbbench; git pull origin master) perldoc -l SQL::Parser | xargs bbedit export HISTFILESIZE=3000 l

This breaks the challenge into three parts, the last of which is basic accumulator stuff that we show Learning Perl for many tasks.

- Get the history

- Extract the commands

- Count and report the results

I’ll cover each of those parts separately as I go through the answers.

Get the history

Most people opened a filehandle on the history file. Some people hard-coded the path while others made it relative to the home directory:

open( FILE, "/Users/me/.bash_history" ); # rm

my $hist_file = "$ENV{HOME}/.bash_history"; # jose

open my $hf, "<", $hist_file;

my $path = $ENV{HOME}; # Dave M

my $history = $path . '/' . '.bash_history';

open( my $f, '<', $history );

open HISTORY, $ENV{'HOME'}.'/.bash_history' # ulric

or die "Cannot open .bash_history: $!";

Daniel Keane was the only person to use zsh, which has a history format with timestamps (one of the issues I noted with the bash history):

my $history_path = "$ENV{'HOME'}/.zhistory";

die "Cannot locate file: $history_path" unless -e $history_path;

open(my $history_fh, '<', $history_path);

Neil Bowers used File::Slurp to get it all at once:

read_file($ENV{'HOME'}.'/.bash_history', chomp => 1);

Anonymous Coward set a default value for the history file but also allows people to override it with a command-line option:

my $histfile = "$ENV{HOME}/.bash_history";

my $position = 0;

my $number = 10;

GetOptions (

'histfile=s' => \$histfile,

'position=i' => \$position,

'number=i' => \$number,

);

A couple of people shelled out to run the history command. WK used history -r to add the history from the history file to the current history, then read the history from the command line:

my @history = qx/$ENV{ SHELL } -i -c "history -r; history"/; # WX

Javier's answer did much of the work in the shell and awk:

my $get_cmds =

qq/$ENV{'SHELL'} -i -c "history -r; history"/

. q/ | awk '{for (i=2; i>NF; i++) printf $i " "; print $NF}'/;

chomp(my @cmds = qx# $get_cmds #);

The two winners for getting the data, however, are the two people who provided one-liners. They used standard input, which means they could handle either a file in @ARGV or a pipe from history:

VINIAN might get a "Useless use of cat Award", something Perl's Randal Schwartz used to hand out. The -a switch (see perlrun splits on whitespace and puts the list in @F:

% cat ~/.bash_history | perl -lane 'if ($F[0] eq "sudo"){$hash{$F[1]}++ } else { $hash{$F[0]}++ }; $count ++; END { @top = map { [ $_, $hash{$_} ] } sort { $hash{$b} <=> $hash{$a} } keys %hash; @max=@top[0..9]; printf("%10s%10d%10.2f%%\n", $_->[0], $_->[1], $_->[1]/$count*100) for @max}'

But, VINIAN's program would work with a file argument, As Chris Fedde uses:

% perl -lanE '$sum{$F[0]}++; END{ say "$_ $sum{$_}" for (reverse sort {$sum{$a} <=> $sum{$b}} keys %sum)}' ~/.bash_history | less

I would think that a better answer to this part would examine the environment variable for HISTFILE, but jose notes that it doesn't work on cygwin. I did not investigate that.

Extract the commands

Extracting the commands is the tough part of the problem, but many people skipped most of that problem. They assumed the first group of non-whitespace was the command, possibly turning an absolute path to its basename.

jose used basename is the command started with a /:

$cmd = basename $cmd if $cmd and $cmd =~ /\//;

You don't really need to check that though. You could just take the basename though, as Dave M did unconditionally:

my $basename = basename( $args[0] );

This ignores something that would be harder to solve; what if two different commands have the same name? For instance, the system perl and a user-installed one might have the same name. Either might be specified as just perl depending on the PATH, and the user-installed one might be a relative path. Most people counted the basename only.

Some people took the commands and checked that they were in the PATH:

for my $p (@paths) {

if ( -e "$p/$basename" ) {

$words{$basename}++;

}

}

No one checked for symbolic links.

WK is the only person who translated aliases:

my %alias = get_aliases_hash();

sub get_aliases_hash {

my @alias = qx/$ENV{'SHELL'} -i -c "alias"/;

return map { m/\Aalias\s+(.+)='(.+)'\s*$/; $1 => $2 } @alias;

}

ulric and WK are the only people who didn't count sudo by skipping it. Here's how ulric did it:

if ($commandline[0] =~ 'sudo') {

shift @commandline;

} # sudo is ignored as a metacommand

I think most people get fair marks for this part, but if I had to choose a winner, I'd go with WK.

Count and report the results

Once you have the commands, most people used the command as a hash key and adding one to the value. There's not too much interesting there.

My Answer

My answer differs substantially only in the way that I examine the command line. I know about the Text::ParseWords module that can break up a line like the shell would. This is, it can break up a line while preserving quoting. I want to handle the shell special separators ; and | except when they are parts of quoted strings. The parse_lines can handle that:

; my $delims = '(?:\s+|\||;)'; parse_line( $delims, 'delimiters', $_ );

The first argument is the regular expression I use to recognize a delimiter outside of a quoted string. I can't rely on whitespace (although at first I tried), but the shell can handle things such as tail -f file|perl -pe '...' where the separator has no whitespace around it.

The second argument, if it is the special value delimiters, returns the delimiter string. I need to use this to recognize the start of new commands on the same line.

My program is long and not very fun, since I limit myself to the material in Learning Perl. Fun things such as splice only show up as "things we didn't cover". It's in the Learning Perl Challenges GitHub repository with my other answers.

As well as keeping track of the commands, I track which ones were modified by other commands that I specify in %modifiers. I didn't handle aliases or basenames like many other people did:

#!perl

use v5.10;

use strict;

use warnings;

use Text::ParseWords;

my %commands;

my %modifieds;

my $N = 10;

my %modifiers = map { $_, 1 } qw( sudo xargs );

my $delims = '(?:\s+|\||;)';

while( <> ) {

my @shellwords =

grep { defined && /\S/ }

parse_line( $delims, 'delimiters', $_ );

next unless @shellwords;

my @start_indices = get_starts( @shellwords );

# go through the shellwords to find the delimiters like ; and |

# one command line can have multiple commands, so find all of

# them.

I: foreach my $i ( 0 .. $#start_indices ) {

my( $start, $end ) = (

$start_indices[$i],

$i < $#start_indices ? $start_indices[$i+1] - 1 : $#shellwords

);

# look through a command group to find the the command

my $modified = 0;

J: foreach my $j ( $start .. $end ) {

next if $shellwords[$j] =~ m/\A$delims\Z/;

if( exists $modifiers{$shellwords[$j]} ) {

$modified = $shellwords[$j];

next;

}

if( $modified ) {

$modifieds{"$modified $shellwords[$j]"}++;

}

$commands{$shellwords[$j]}++;

last J;

}

}

}

say "------ Top commands";

report_top_ten( $N, %commands );

say "------ Top modified commands";

report_top_ten( $N, %modifieds );

sub get_starts {

my @starts = 0;

while ( my( $i, $value ) = each @_ ) {

push @starts, $i if $value =~ /\A$delims\z/;

}

return @starts;

}

sub report_top_ten {

my( $top_count, %hash ) = @_;

my @top_commands = sort { $hash{$b} <=> $hash{$a} } keys %hash;

my $max_width = length $hash{$top_commands[0]};

while( my( $i, $value ) = each @top_commands ) {

last if $i >= $top_count;

printf '%*d %s' . "\n", $max_width, $hash{$top_commands[$i]}, $top_commands[$i];

}

}

And, with that, I find my top commands by reading from standard input and using my environment variable to locat the file:

$ perl history.pl $HISTFILE ------ Top commands 843 make 518 perl 364 ls 343 git 244 cd 156 bb 117 open 68 pwd 51 rm 42 ssh ------ Top modified commands 3 xargs rm 2 xargs bbedit 2 xargs bb 1 xargs stripper 1 sudo cp

The bb is an alias for bbedit, the command-line program to do various things with that GUI editor. The stripper is a program I use to remove end-of-line whitespace. I most often use it with find, which is why it shows up after xargs.

That make comes mostly as make test, and the perl as perl Makefile.PL.